Stacking is a technology for expanding the number of ports on Ethernet switches. It is not standardized: each manufacturer implements stacking with its own proprietary protocol, so switches from different manufacturers cannot be stacked together.

Stacking is widely used as an access method in large-scale Internet data center architectures. Years of practice have shown that it has both advantages and disadvantages in high-reliability network scenarios. As businesses grow and service-quality requirements rise, especially in public cloud scenarios, services are expected to run uninterrupted 24×7, and compensation for a service interruption is paid at a ratio of at least 1:100. Solving the disadvantages of stacking has therefore become particularly important.

This article covers the pros and cons of stacking technology and one way to "de-stack" the network.

The benefits of stacking

1. Simplified management

When multiple switches in the network are virtualized into one device through stacking, network operation and maintenance personnel only need to manage one device instead of several. Although the number of ports to manage does not decrease, the time spent repeatedly logging in to different devices does, and some of the related port configuration is simplified as well, which lowers the overall management cost. As shown in Figure 1:

▲ Figure 1

Originally, four network devices had to be maintained. After the access-layer devices are stacked, only one network device needs to be maintained.

2. Elastic expansion

Port quantity expansion

When the number of access terminals increases to the point where the port density of the original switch cannot meet the access requirements, you can add a new switch to form a stacking system with the original switch, as shown in Figure 2:

▲ Figure 2

System processing capacity expansion

When the forwarding capacity of the core switch cannot meet the demand, you can add a new switch to form a stacking system with the original switch, as shown in Figure 3:

▲ Figure 3

3. High reliability

High reliability of equipment

For cost reasons, box-type devices are generally designed with a single CPU and a single switching chip. If that CPU fails, the entire device becomes unavailable, which is a high risk. A stack is equivalent to a chassis-type device and therefore has spare main control boards and interface boards, as shown in Figure 4:

▲ Figure 4

The Master in the stack is equivalent to the main control board of the virtual device, and the Slave device is comparable to the backup main control board.

High network reliability

The stacking system supports two physical topologies: chain connection and ring connection, as shown in Figure 5:

▲ Figure 5

Chain stacking requires at least one fewer stacking link than ring stacking, so it costs less.

Ring stacking has higher availability because the stacking system is not disrupted if any single link is disconnected.
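The trade-off between the two topologies can be checked with a small connectivity sketch. The code below is illustrative only: the four-member stack and the helper names `is_connected` and `survives_any_single_failure` are assumptions made for this example, not part of any vendor's stacking implementation.

```python
from collections import deque

def is_connected(nodes, links):
    """Return True if every node is reachable from the first one over the links."""
    adjacency = {n: set() for n in nodes}
    for a, b in links:
        adjacency[a].add(b)
        adjacency[b].add(a)
    seen = {nodes[0]}
    queue = deque([nodes[0]])
    while queue:
        current = queue.popleft()
        for neighbour in adjacency[current]:
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append(neighbour)
    return len(seen) == len(nodes)

def survives_any_single_failure(nodes, links):
    """Return True if the stack stays in one piece when any one link is removed."""
    return all(
        is_connected(nodes, links[:i] + links[i + 1:])
        for i in range(len(links))
    )

# Hypothetical four-member stack.
switches = ["sw1", "sw2", "sw3", "sw4"]
chain = [("sw1", "sw2"), ("sw2", "sw3"), ("sw3", "sw4")]
ring = chain + [("sw4", "sw1")]  # the ring closes the loop with one extra link

print("extra links needed by the ring:", len(ring) - len(chain))
print("chain survives any single link failure:", survives_any_single_failure(switches, chain))
print("ring survives any single link failure:", survives_any_single_failure(switches, ring))
```

Removing any one link from the chain isolates at least one member, while the ring still has a second path between every pair of members.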

The Disadvantages of Stacking

1. Software Risks

Software upgrades are an unavoidable topic for any stacking system. No manufacturer can guarantee that its software is free of bugs, and once a bug appears, the software has to be upgraded. Technologies such as ISSU can upgrade without interruption, but their scope is limited: ISSU applies only when the gap between the two versions is very small. A conventional upgrade requires all of the stacked devices to restart at the same time, so a service interruption is unavoidable. This is why "de-stacking" has become a trend in data centers.

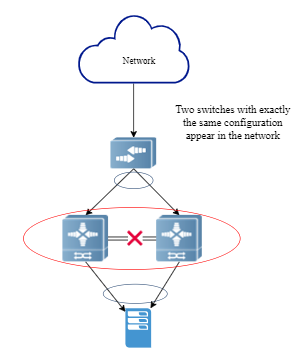

2. Risk of Split

When a stacking link between the switches fails or behaves abnormally, the stack splits. This is uncommon, but it does happen in production. After a split, two switches with the same configuration are effectively present in the network, which causes configuration conflicts and ultimately interrupts the services carried by the stacking system, as shown in Figure 6:

▲ Figure 6

There is a mitigation for this problem: once the system detects a split, it shuts down all ports except the stacking ports, the management port, and any exception ports specified by the administrator, so that the split does not affect the rest of the network. This avoids having two switches with the same configuration in the network, but the cost is obvious: service recovery becomes complicated, and remote recovery becomes extremely difficult.
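The port-shutdown rule described above can be sketched as follows. This is a minimal model under stated assumptions: the `Port` class, the role labels `"stack"`, `"mgmt"`, and `"service"`, and the function `isolate_after_split` are hypothetical names for illustration and do not correspond to any switch CLI or API.

```python
from dataclasses import dataclass

@dataclass
class Port:
    name: str
    role: str        # hypothetical labels: "stack", "mgmt", or "service"
    up: bool = True

def isolate_after_split(ports, exception_ports=frozenset()):
    """Shut every port except stacking, management, and administrator exceptions."""
    for port in ports:
        keep = port.role in ("stack", "mgmt") or port.name in exception_ports
        if not keep:
            port.up = False
    return [p.name for p in ports if p.up]

ports = [
    Port("stack0", "stack"),
    Port("mgmt0", "mgmt"),
    Port("eth1", "service"),
    Port("eth2", "service"),
    Port("eth3", "service"),
]

# The administrator has marked eth3 as an exception port.
still_up = isolate_after_split(ports, exception_ports={"eth3"})
print(still_up)
```

The sketch also shows why recovery is awkward: every service port that was shut down has to be brought back deliberately, and only the management and exception ports remain as a way in.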

A way to "de-stack"

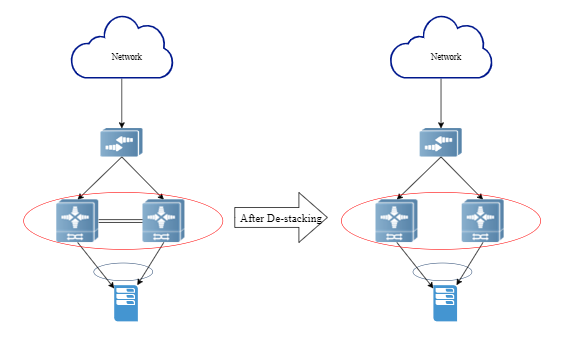

Next, we introduce one "de-stacking" implementation method, VSU-Lite, as shown in Figure 7:

▲ Figure 7

1. "De-stacking" networking function implementation

The server still uses dual network cards and dual uplinks to two switches, and the network card mode is set to bond mode 4, which means using IEEE 802.3ad (dynamic link aggregation).

(Note: An aggregated interface sends each ARP packet out of only one physical port, but in the "de-stacking" scenario both switches need to receive the ARP requests from the server. The server therefore needs a driver adjustment, which is not discussed here.)

Because the server runs LACP, the protocol must keep working even though the two access switches are not connected to each other. We use a "deceptive" method to make the server "think" that the upstream device is still a single device rather than two independent ones. This requires a few small "modifications" to the switch configuration:

● Set the LACP System ID to the same value on both switches. Because the System ID is composed of the system priority and the system MAC address, it has to be adjusted manually so that it matches.

● Add the Device ID to the LACP Port ID (manual configuration) so that the server sees different LACP member port numbers;

● Configure the same gateway MAC address on both switches;

● Enable ARP-to-host routing (converting ARP entries into host routes) and redistribute the host routes into the main network through a routing protocol such as OSPF or BGP.
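The effect of the first two adjustments can be pictured from the server's point of view. The sketch below is a simplification, assuming that an 802.3ad bond only aggregates links whose partner reports the same system ID and key; real LACP selection logic checks more than this. The `PartnerInfo` type, the `make_port_id` encoding (device ID in the high bits), and all MAC addresses and keys are hypothetical values chosen for illustration.

```python
from collections import defaultdict
from typing import NamedTuple

class PartnerInfo(NamedTuple):
    system_priority: int
    system_mac: str
    key: int
    port_id: int

def make_port_id(device_id, local_port, bits_for_port=8):
    """Hypothetical encoding: place the device ID above the local port number."""
    return (device_id << bits_for_port) | local_port

def group_links(partners):
    """Group server NICs by the partner (system priority, system MAC, key) they see."""
    groups = defaultdict(list)
    for nic, info in partners.items():
        groups[(info.system_priority, info.system_mac, info.key)].append(nic)
    return dict(groups)

# Two independent switches with their own system MACs: the NICs cannot aggregate.
independent = {
    "eth0": PartnerInfo(32768, "00:11:22:33:44:01", 10, 5),
    "eth1": PartnerInfo(32768, "00:11:22:33:44:02", 10, 5),
}

# Same System ID on both switches, with the device ID folded into the port ID.
shared_mac = "00:11:22:33:44:aa"
de_stacked = {
    "eth0": PartnerInfo(32768, shared_mac, 10, make_port_id(1, 5)),
    "eth1": PartnerInfo(32768, shared_mac, 10, make_port_id(2, 5)),
}

print(len(group_links(independent)))  # number of separate partner systems seen
print(len(group_links(de_stacked)))   # both NICs now see one partner system
print({nic: info.port_id for nic, info in de_stacked.items()})
```

With matching System IDs the server sees a single partner, and the device ID keeps the two member ports distinguishable even though both switches use local port 5.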

With these simple configurations in place, the server believes that it has successfully joined a single LACP aggregation group. At the same time, the gateway is placed on the access switch, which achieves the "de-stacking" of the data center network architecture.
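Because the gateway sits on the access switch, each switch has to advertise how to reach the servers it has learned. The following sketch shows the idea behind ARP-to-host routing under simple assumptions: the ARP table contents, the port names, and the function `arp_to_host_routes` are hypothetical, and the actual redistribution into OSPF or BGP is left out.

```python
import ipaddress

def arp_to_host_routes(arp_table):
    """Turn each ARP entry into a /32 host route pointing at the learned port."""
    routes = []
    for ip in sorted(arp_table, key=ipaddress.ip_address):
        mac, port = arp_table[ip]
        prefix = ipaddress.ip_network(f"{ip}/32")
        routes.append({"prefix": str(prefix), "out_port": port, "mac": mac})
    return routes

# Hypothetical ARP entries learned by one access switch: IP -> (MAC, port).
arp_table = {
    "10.0.0.12": ("52:54:00:aa:00:12", "eth2"),
    "10.0.0.11": ("52:54:00:aa:00:11", "eth1"),
}

routes = arp_to_host_routes(arp_table)
for route in routes:
    print(route["prefix"], "via", route["out_port"])
```

Each host route is specific to one server, so the rest of the network can reach that server through whichever access switch currently advertises it.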

2. Optimization function implementation

Broadcast suppression

The switch enables proxy ARP, which converts Layer 2 forwarding into Layer 3 forwarding and suppresses broadcasts.

Silent server "activation"

The switch enables ARP scanning so that silent servers, which do not send traffic on their own, are still learned and do not lose access to the external network.

Uplink port monitoring

The switch monitors its upstream ports and shuts down the downstream ports once all upstream ports are disconnected, which avoids packet loss for upstream traffic. To prevent frequent network changes caused by port flapping, the downstream ports can also be set to reopen after a delay.
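The monitoring behaviour, including the delayed reopening, can be modelled in a few lines. This is a sketch under stated assumptions rather than the switch's actual logic: the `MonitorLink` class, its `up_delay` parameter, and the explicit `now` timestamps are hypothetical and exist only to make the timing easy to follow.

```python
class MonitorLink:
    """Hypothetical model: downlinks follow the uplink group, with a delayed reopen."""

    def __init__(self, uplinks, up_delay):
        self.uplink_state = {name: True for name in uplinks}
        self.up_delay = up_delay       # seconds to wait before reopening downlinks
        self.downlinks_enabled = True
        self._recovered_at = None      # time an uplink came back, while waiting

    def set_uplink(self, name, is_up, now):
        self.uplink_state[name] = is_up
        if not any(self.uplink_state.values()):
            # All uplinks are down: close the downlinks and cancel any pending reopen.
            self.downlinks_enabled = False
            self._recovered_at = None
        elif not self.downlinks_enabled and self._recovered_at is None:
            # First uplink has returned: start the reopen timer.
            self._recovered_at = now

    def tick(self, now):
        """Reopen the downlinks once the delay has elapsed without another total loss."""
        if self._recovered_at is not None and now - self._recovered_at >= self.up_delay:
            self.downlinks_enabled = True
            self._recovered_at = None
        return self.downlinks_enabled

monitor = MonitorLink(["up1", "up2"], up_delay=30)
monitor.set_uplink("up1", False, now=0)   # one uplink left, downlinks stay open
monitor.set_uplink("up2", False, now=5)   # no uplinks left, downlinks close
monitor.set_uplink("up2", True, now=10)   # uplink returns, timer starts
print(monitor.tick(now=20))               # still inside the delay window
monitor.set_uplink("up2", False, now=25)  # uplink flaps, timer is cancelled
monitor.set_uplink("up2", True, now=50)   # uplink returns again
print(monitor.tick(now=80))               # delay has elapsed, downlinks reopen
```

A flap inside the delay window cancels the pending reopen, so the downstream ports change state only once the uplink has stayed up for the full delay.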

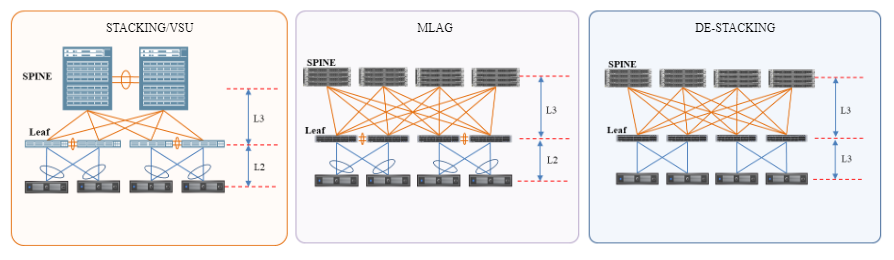

Comparison

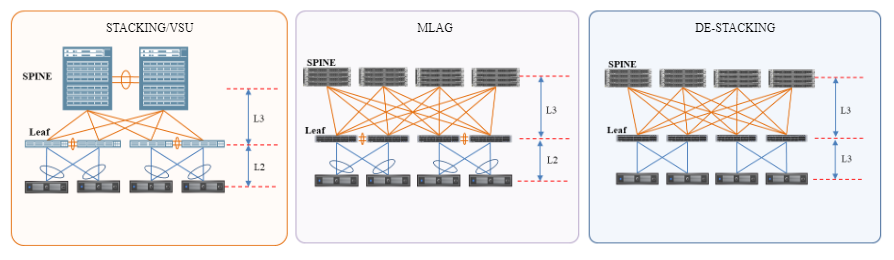

The figure below shows the architectural differences between stacking, MLAG, and de-stacking. A concise comparison follows in Table 1.

▲ Figure 8

| Comparison | Stacking | MLAG | De-stacking |
| --- | --- | --- | --- |
| Reliability | Low: active-standby operation; state is maintained through a software heartbeat; the CPU is affected because state and table entries must be synchronized; there is a risk of split-brain and dual-master false kill. | Medium: dual-active operation with protocol-level coupling; state is maintained through a software heartbeat; state and table entries still need to be synchronized. | High: dual-active operation with no horizontal connection; full CPU performance; no stack-split risk; no state or table-entry synchronization. |
| Configuration complexity | Medium: involves system-level configuration, such as VSL links and primary/backup management. | High: involves control-plane coupling; information synchronization, state detection, and so on must be configured (nearly 30 configuration items per device). | Medium: no synchronization or detection to configure; virtual LACP, ARP-to-host routing, Monitor Link, and so on are configured. |
| Upgrade risk | High: version compatibility issues exist; the upgrade takes minutes; restarting the whole stack interrupts services. | Low: upgrading one device does not affect the other device; the impact on terminals is short, on the order of seconds. | Low: upgrading one device does not affect the other device; the impact on terminals is short, on the order of seconds. |
| Server configuration | No additional configuration required. | No additional configuration required. | The server NIC is configured with bond mode 4 and must support the dual-send ARP function. |

Summary

This article described one way to achieve "de-stacking" in a data center network architecture. Ruijie Networks data center switch products use VSU-Lite technology to support a "de-stacking" architecture, which resolves the disadvantages of stacking while keeping the server access mode unchanged. Of course, "de-stacking" can also be achieved through other technical means.