Network Load Balancing in Data Centers: An Overview

Challenges of Network Load Balancing

1. Traffic Dynamics: Data centre networks experience dynamic traffic patterns, where a limited number of substantial flows can dominate the network load, while numerous smaller flows may induce significant fluctuations in the network state. The latency associated with flow scheduling complicates the problem of load balancing in these environments.

2. Congestion Perception Difficulty: The high level of dynamism in data centre network traffic results in a temporal delay in the perception of network congestion. The congestion information available at any given moment reflects the previous state of the network. Consequently, the accuracy and timeliness of this congestion perception directly influence the efficacy of load-balancing strategies.

3. Packet Out-of-Order Issues: Traditional load balancing techniques in data centre networks typically rely on flow scheduling, using a hash calculation to pin each flow to a single path. When two flows collide on the same link, they share its bandwidth, so the transmission time can effectively double. Packet-level scheduling avoids such collisions but can deliver packets out of order, because packets of the same flow travel over paths with different delays. The acknowledgement mechanisms of transport layer protocols may then treat the reordering as packet loss and trigger unnecessary retransmissions.

4. Abnormal Traffic Scheduling: When a network device or link fails, the uplinks and downlinks may become asymmetric, which causes network congestion and significantly reduces data transmission efficiency. Load-balancing solutions must respond promptly to such failures and redistribute the affected traffic within the network to optimize overall transmission performance.

The Challenge of Multi-Link Load Balancing in Data Centre Networks

ECMP (Equal-Cost Multi-Path) routing makes use of multiple paths of equal cost that lead to the same destination address. In environments that support it, Layer 3 traffic forwarded towards a specific destination IP or network segment can be shared across these paths, which balances load across the network links. Several path selection strategies exist that also allow ECMP to switch paths promptly when a link fails. The most common is:

1. HASH: A hash computed over the IP 5-tuple (source IP, destination IP, source port, destination port, and protocol) selects a specific path for each data flow.

By utilizing these strategies, data centres can achieve more efficient load balancing and overall improved network performance.
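The hash-based selection described above can be sketched in a few lines. This is only an illustration: CRC32 stands in for the hash function (real switches use vendor-specific hashes), and the names `FiveTuple`, `select_path`, and `select_path_after_failure` are hypothetical, not part of any product API.

```python
import zlib
from typing import NamedTuple


class FiveTuple(NamedTuple):
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int
    protocol: int  # IP protocol number, e.g. 6 = TCP, 17 = UDP


def select_path(flow: FiveTuple, paths: list[str]) -> str:
    """Pick one equal-cost path by hashing the flow's 5-tuple.

    CRC32 is an assumption used for illustration only.
    """
    key = "|".join(str(field) for field in flow).encode()
    return paths[zlib.crc32(key) % len(paths)]


def select_path_after_failure(flow: FiveTuple, paths: list[str], failed: str) -> str:
    """Re-run the selection over the surviving paths once a link has failed."""
    alive = [p for p in paths if p != failed]
    return select_path(flow, alive)
```

Because the hash depends only on the 5-tuple, every packet of a flow follows the same path, which preserves packet order but also pins a large flow to one link for its whole lifetime.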

ECMP (Equal-Cost Multi-Path) is a relatively straightforward load-balancing strategy. However, it presents several challenges in practical application:

1. HASH Polarization Issue

2. HASH Consistency Issue

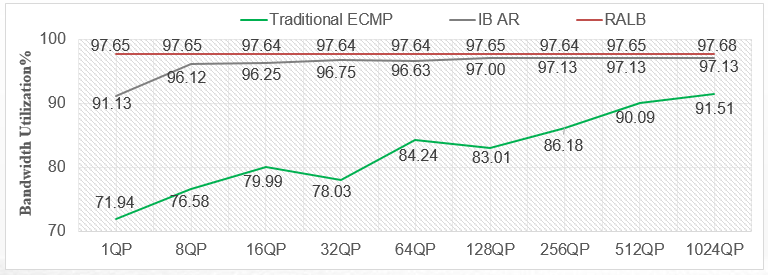

3. Static HASH Imbalance Issue

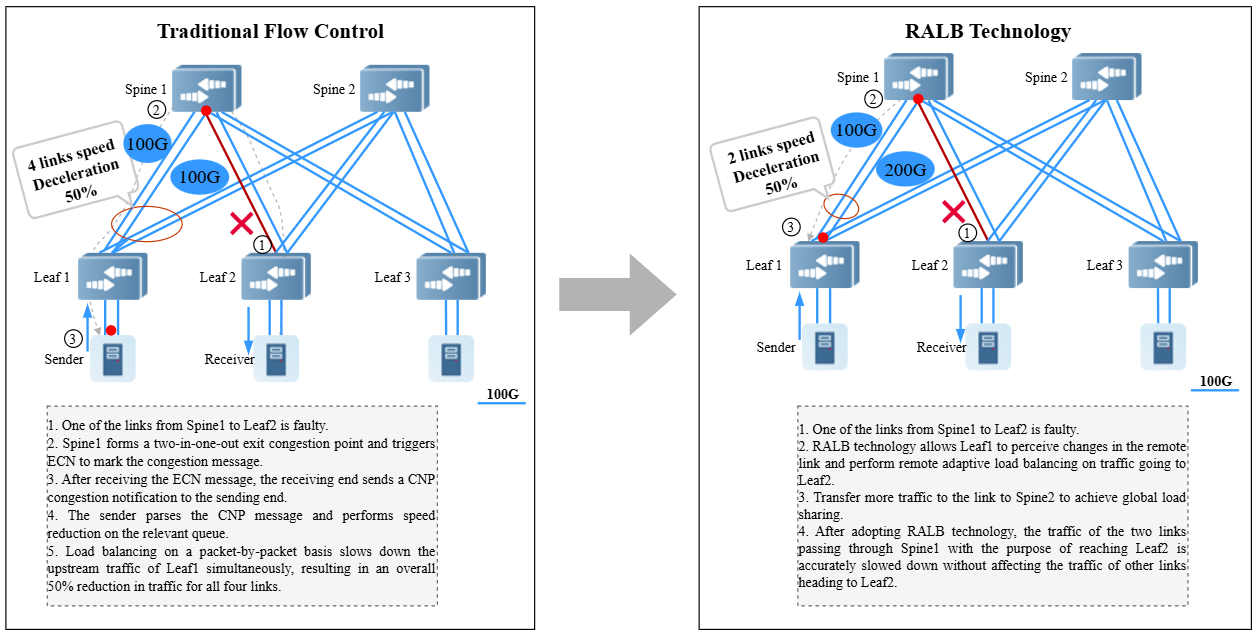

In traditional Ethernet-based data centre networks, achieving load balancing through ECMP is effective in scenarios characterized by numerous small flows. However, the flow characteristics associated with artificial intelligence (AI) training often involve substantial concurrent large flows, which may introduce long-tail delays stemming from uneven hashing. This dynamic poses a challenge to maintaining training efficiency and supporting large-scale machine learning initiatives within an Ethernet framework.
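A small sketch can make the imbalance concrete. It assumes CRC32 as a stand-in hash and uses hypothetical flow names and sizes; the helper names are illustrative. With five 100G flows and only four links, at least one link must carry two large flows whatever the hash does, so that link is loaded well above the average.

```python
import zlib


def hash_flows_to_links(flow_ids, flow_gbps, num_links):
    """Return the per-link load (Gbps) when a hash pins each flow to one link."""
    load = [0] * num_links
    for flow_id in flow_ids:
        link = zlib.crc32(flow_id.encode()) % num_links  # CRC32 is an assumption
        load[link] += flow_gbps
    return load


def imbalance(load):
    """Ratio of the busiest link to the average link load (1.0 = perfectly even)."""
    return max(load) / (sum(load) / len(load))


# A few large flows, as in AI training traffic: five 100G flows over four links.
elephants = hash_flows_to_links([f"elephant-{i}" for i in range(5)], 100, 4)

# Many small flows carrying the same total volume: five hundred 1G flows.
mice = hash_flows_to_links([f"mouse-{i}" for i in range(500)], 1, 4)

print("large flows:", elephants, "imbalance:", imbalance(elephants))
print("small flows:", mice, "imbalance:", imbalance(mice))
```

The flows on the overloaded link finish last, and that is where the long-tail delay mentioned above comes from.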

Ruijie RALB Load Balancing Technology

● Dynamic Load Balancing at the Packet Level

Static HASH is a flow-based load-balancing mechanism: after the ECMP lookup, the hash selects one link in the ECMP group, and every packet of the flow is forwarded over that link. Dynamic load balancing instead forwards per packet, dividing the flow into smaller units, and sends each packet according to the current load of each link in the ECMP group. In AI training scenarios that use RALB technology, Read and Write data packets are therefore split into smaller units and balanced dynamically packet by packet, while other message types keep the static per-flow method. This strategy achieves higher bandwidth utilization.
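The difference between the two forwarding modes can be sketched as follows. This is a simplified model, not the RALB implementation: cumulative bytes sent stand in for whatever load metric the hardware actually tracks, CRC32 stands in for the static hash, and the function names are hypothetical.

```python
import zlib


def forward_per_flow(packets, num_links):
    """Static hash: every packet of a flow goes to the link chosen by the flow hash."""
    load = [0] * num_links
    for flow_id, size in packets:
        load[zlib.crc32(flow_id.encode()) % num_links] += size
    return load


def forward_per_packet(packets, num_links):
    """Dynamic: each packet goes to the link that currently carries the least load."""
    load = [0] * num_links
    for _flow_id, size in packets:
        link = min(range(num_links), key=lambda i: load[i])
        load[link] += size
    return load


# One large flow of eight 4096-byte packets over a four-link ECMP group.
packets = [("flow-A", 4096)] * 8

print(forward_per_flow(packets, 4))    # all bytes land on a single link
print(forward_per_packet(packets, 4))  # bytes are spread evenly across the links
```

With a single large flow, the static hash leaves three of the four links idle, while per-packet forwarding uses all of them. The price is that packets of the same flow may arrive out of order, which the receiving side has to tolerate.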

● Global Load Balancing

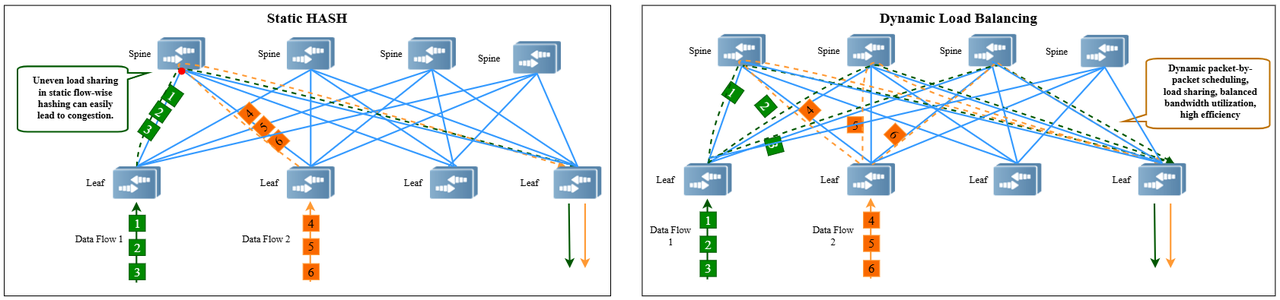

In the event of a link failure within a data centre network, such as the failure of an optical module, switch port malfunction, or disconnection of a fibre link, an asymmetrical condition arises between the uplinks and downlinks of the switch. This scenario may result in congestion at the switch's egress port. During instances of queue congestion, the switch marks affected packets with Explicit Congestion Notification (ECN). Upon receiving these ECN-marked packets, the receiving end generates a Congestion Notification Packet (CNP) to communicate the congestion back to the sending end. In response, the sender is prompted to throttle the relevant priority queue.
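The ECN and CNP feedback loop described above can be sketched roughly as follows. The marking threshold, the rate-cut factor, and all function names are hypothetical values chosen for illustration; real deployments tune these parameters and use more elaborate rate-control algorithms.

```python
from dataclasses import dataclass

ECN_THRESHOLD_KB = 200   # hypothetical queue depth at which the switch starts marking
RATE_CUT_FACTOR = 0.5    # hypothetical multiplicative decrease applied by the sender


@dataclass
class Packet:
    priority: int
    ecn_marked: bool = False


def switch_enqueue(pkt: Packet, queue_depth_kb: int) -> Packet:
    """Switch: mark the packet with ECN when the egress queue is congested."""
    if queue_depth_kb > ECN_THRESHOLD_KB:
        pkt.ecn_marked = True
    return pkt


def receiver_handle(pkt: Packet):
    """Receiver: return a CNP (modelled as the priority to slow down), or None."""
    return pkt.priority if pkt.ecn_marked else None


def sender_handle_cnp(rates_gbps: dict, cnp_priority: int) -> dict:
    """Sender: throttle only the priority queue named in the CNP."""
    rates = dict(rates_gbps)
    rates[cnp_priority] *= RATE_CUT_FACTOR
    return rates
```

For example, a packet of priority 3 that passes a queue 300 KB deep is marked, the receiver answers with a CNP for priority 3, and the sender halves the rate of that queue while leaving the other queues untouched.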

For illustrative purposes, consider the case where one of the links connecting Spine1 to Leaf2 fails. Only a single 100G link then remains between Spine1 and Leaf2, so the traffic that Leaf1 sends to Leaf2 via Spine1 exceeds what the surviving link can carry, and the egress port on that path becomes congested. To relieve the congestion, the traffic sent via Spine1 must be throttled to 100G. Because load is balanced per packet, this throttling affects all of Leaf1's outgoing traffic at once and reduces its upstream traffic. Since traffic from Leaf1 to Leaf2 is spread evenly across Spine1 and Spine2, the traffic routed via Spine2 is also throttled to 100G, which leaves an aggregate throughput of only 200G.

With Remote Adaptive Load Balancing (RALB) technology, the Spine switch swiftly detects changes in link status and promptly informs the Top of Rack (TOR) switch. Leaf1 can then react to the change on the remote link and adaptively redistribute the traffic directed toward Leaf2, which achieves global load balancing. With RALB in place, Leaf1 sends only one-third of the traffic for Leaf2 through Spine1 and forwards the remaining two-thirds via Spine2. This uses the full 300G of bandwidth still available from Leaf1 to Leaf2, a 50% increase over the previous maximum of 200G. The benefit grows with the number of Spine switches: bandwidth utilization rises from 3/6 to 5/6 with three Spine switches, and from 4/8 to 7/8 with four Spine switches.
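The figures in this example can be reproduced with a short calculation. The sketch assumes, as the numbers above imply, that every Spine switch has two 100G links to Leaf2 and that one of Spine1's links has failed. The function names are illustrative.

```python
from fractions import Fraction

LINK_GBPS = 100  # per-link speed used in the example


def spine_capacities(num_spines, links_per_spine=2, failed_links=1):
    """Capacity (Gbps) from each Spine to Leaf2; the failure is on Spine1 only."""
    caps = [links_per_spine * LINK_GBPS] * num_spines
    caps[0] -= failed_links * LINK_GBPS
    return caps


def throughput_equal_split(caps):
    """Without RALB: every Spine gets the same share, so the weakest one caps all."""
    return len(caps) * min(caps)


def throughput_weighted(caps):
    """With RALB: traffic follows the remaining capacity, so all of it is usable."""
    return sum(caps)


def ralb_weights(caps):
    """Share of traffic sent via each Spine, proportional to remaining capacity."""
    total = sum(caps)
    return [Fraction(c, total) for c in caps]


for n in (2, 3, 4):
    caps = spine_capacities(n)
    full = n * 2 * LINK_GBPS
    print(n, "spines:", throughput_equal_split(caps), "->",
          throughput_weighted(caps), "of", full, "Gbps")
```

For two Spine switches this gives 200G without RALB and 300G with it, with weights of 1/3 and 2/3. For three and four Spine switches it gives 300G versus 500G out of 600G, and 400G versus 700G out of 800G, matching the 3/6 to 5/6 and 4/8 to 7/8 ratios above.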

Moreover, once RALB technology is implemented, proactive flow control can be initiated on Leaf1, significantly contributing to reduced latency within the network.

Ruijie Networks' RALB load-balancing technology transcends the limitations imposed by conventional practices. Leveraging the current load status of links, it executes global traffic balancing and, in conjunction with dynamic packet-by-packet forwarding, achieves a non-blocking network environment characterized by ultra-high bandwidth utilization. This advancement markedly enhances the efficiency and stability of data transmission, thereby providing robust support for the effective operation of data centres.

In light of escalating global Internet traffic and the increasingly diverse requirements of data applications, Ruijie Networks is dedicated to advancing and innovating network technology. The introduction of its global load-balancing solution is a testament to that pursuit of progress. Through ongoing research and development, as well as product innovation, Ruijie Networks is poised to continue delivering efficient, reliable, and intelligent network solutions for data centres worldwide. The emergence of the AIGC era will further drive rapid development across various sectors of the Internet industry.
