Latency Issues with CXL Technology

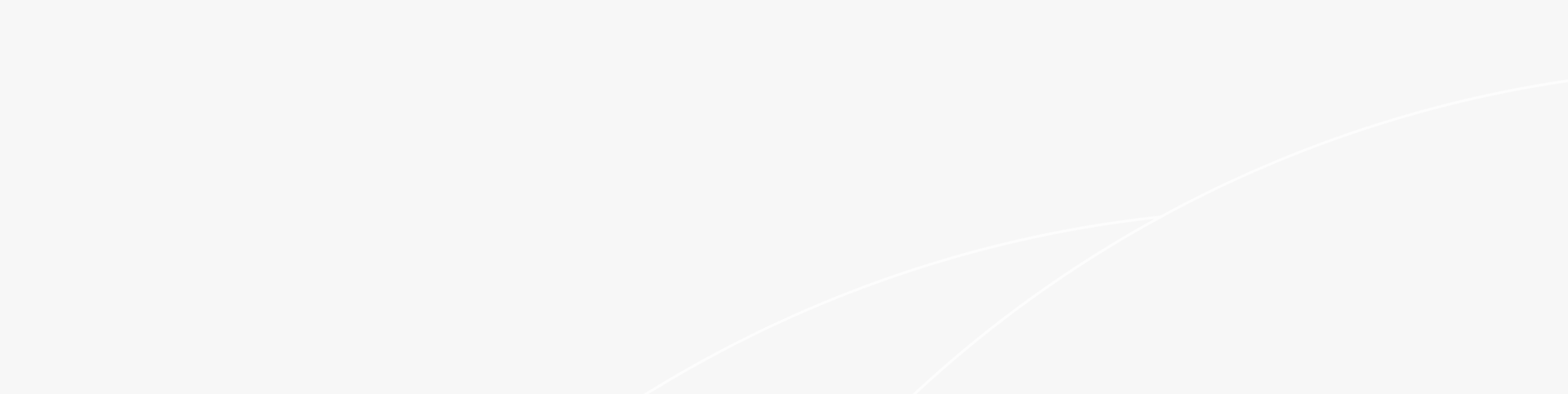

At the previous Hot Chips event, the CXL Consortium presented specific latency figures for CXL technology. CXL-attached memory, which sits outside the CPU, has a latency of approximately 170 to 250 nanoseconds. That is higher than CPU-attached local memory but lower than the other CPU-independent tiers: non-volatile memory (NVM), disaggregated memory over network connections, solid-state drives (SSDs), and hard disk drives (HDDs).

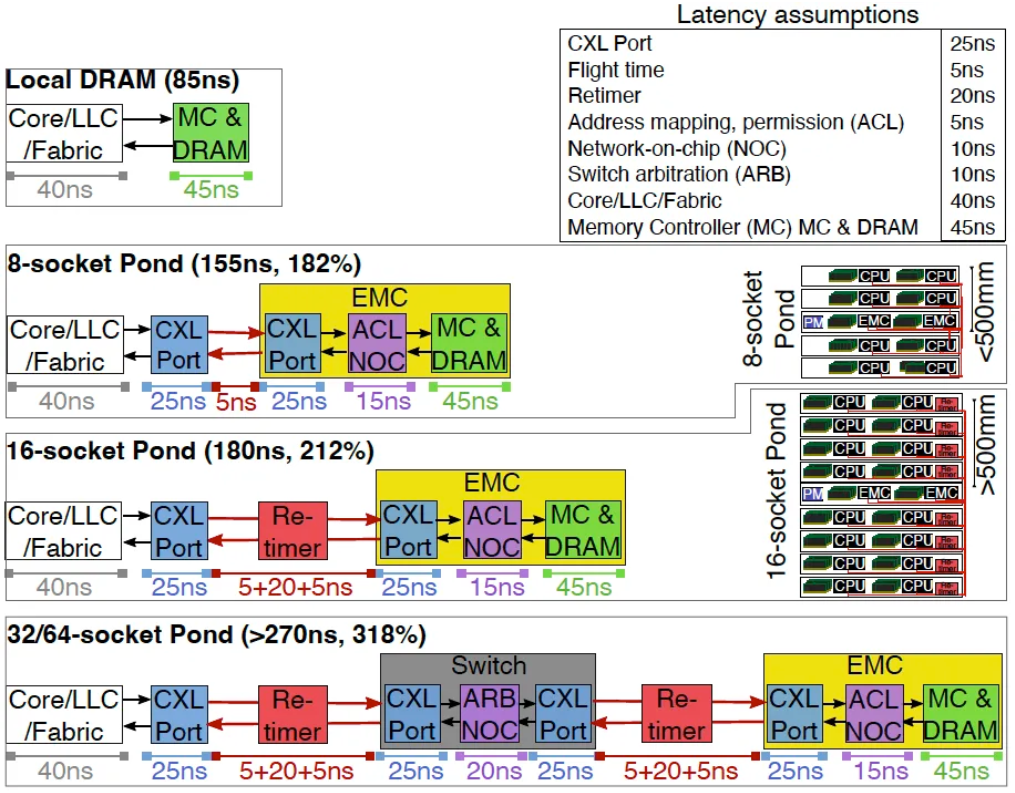

A report from Microsoft Azure indicates that CXL memory shows a latency gap of 180% to 320% relative to CPU-attached memory, caches, and registers. The accompanying figure gives the detailed latency values and ratios for each scenario. Applications differ in how sensitive they are to latency, and the figure also shows how the latency differences between CXL networking schemes affect application performance. Without optimization, the overall trend is that more applications suffer a performance decline as latency grows, although the exact percentage has to be evaluated case by case.
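To get a feel for what such a gap means for an application, a simple weighted average can help. The sketch below is only an illustration: it assumes the 180% and 320% figures can be read as CXL latency being 1.8x and 3.2x local memory latency, and the function name and the access mixes are hypothetical.

```python
def effective_latency_ratio(cxl_fraction: float, cxl_ratio: float) -> float:
    """Average memory latency relative to local memory (1.0 = all accesses local).

    cxl_fraction: share of memory accesses served from CXL memory (0..1).
    cxl_ratio: CXL latency as a multiple of local latency (assumed 1.8 to 3.2).
    """
    if not 0.0 <= cxl_fraction <= 1.0:
        raise ValueError("cxl_fraction must be between 0 and 1")
    # Local accesses cost 1.0, CXL accesses cost cxl_ratio.
    return (1.0 - cxl_fraction) + cxl_fraction * cxl_ratio


if __name__ == "__main__":
    for fraction in (0.10, 0.25, 0.50):  # hypothetical access mixes
        low = effective_latency_ratio(fraction, 1.8)
        high = effective_latency_ratio(fraction, 3.2)
        print(f"{fraction:.0%} of accesses on CXL: {low:.2f}x to {high:.2f}x local latency")
```

The smaller the share of accesses that land on CXL memory, the closer the average stays to local latency, which is why page placement matters so much in the sections that follow.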

Looking ahead, the industry expects CXL memory latency to improve significantly once PCIe 7.0/CXL 3.0 is widely deployed; however, this is unlikely to happen before 2024-2025. In the interim, performance can still be improved with better memory management, scheduling, and monitoring software that mitigates the latency difference between local memory and remote CXL memory.

Solutions for CXL Latency

To improve performance in such systems, Meta has explored an approach to memory page management that departs from conventional local-DRAM practice. The approach tracks the temperature of memory pages, that is, how frequently they are accessed, through a Linux kernel extension called Transparent Page Placement (TPP). The latency gap between CXL memory and local memory resembles the one that distributed storage systems handle by tiering flash and hard disk drives, so Meta introduced the Multi-Tier Memory concept in its TPP paper. The framework measures how much of the data in memory is hot, warm, or cold according to its level of activity, and then finds mechanisms for placing hot data in the fastest memory, cold data in the slowest memory, and warm data in the intermediate memory.
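The hot/warm/cold idea can be sketched in a few lines. This is a toy model, not TPP's kernel implementation: the access-count thresholds, the tier names, and the functions `classify_page` and `place_pages` are all assumptions made for illustration.

```python
from typing import Dict

# Hypothetical tier names; the text only speaks of fastest, intermediate,
# and slowest memory.
TIER_FOR_TEMPERATURE = {"hot": "fastest", "warm": "intermediate", "cold": "slowest"}


def classify_page(access_count: int, hot_min: int, warm_min: int) -> str:
    """Label a page by how often it was accessed in the sampling window."""
    if access_count >= hot_min:
        return "hot"
    if access_count >= warm_min:
        return "warm"
    return "cold"


def place_pages(access_counts: Dict[int, int],
                hot_min: int = 20, warm_min: int = 5) -> Dict[int, str]:
    """Map each page number to the memory tier matching its temperature."""
    return {
        page: TIER_FOR_TEMPERATURE[classify_page(count, hot_min, warm_min)]
        for page, count in access_counts.items()
    }


if __name__ == "__main__":
    sampled = {1: 50, 2: 3, 3: 12, 4: 0}  # page number -> accesses (made up)
    for page, tier in place_pages(sampled).items():
        print(f"page {page}: {tier} memory")
```

A real system also has to respect tier capacity and the cost of migrating pages, which this sketch leaves out.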

The TPP design space encompasses four primary domains:

Meta's TPP is a kernel-mode solution that transparently monitors page temperature and places pages accordingly. It works alongside Chameleon, Meta's memory tracking tool, which runs in Linux user space and tracks the performance of CXL memory across applications. Meta evaluated TPP with various production workloads, keeping "hotter" pages in local memory and moving "colder" pages to CXL memory. The results show that TPP improves performance by approximately 18% compared with applications running on the default Linux configuration. TPP also improves on the two leading existing techniques for tiered memory management, NUMA balancing and AutoTiering, by 5% to 17%.

| Workload / Throughput (%) (normalized to baseline) | Default Linux | TPP | NUMA Balancing | Auto Tiering |
| --- | --- | --- | --- | --- |
| Web1 (2:1) | 83.5 | 99.5 | 82.8 | 87.0 |
| Cache1 (2:1) | 97.0 | 99.9 | 93.7 | 92.5 |
| Cache1 (1:4) | 86.0 | 99.5 | 90.0 | Fails |
| Cache2 (2:1) | 98.0 | 99.6 | 94.2 | 94.6 |
| Cache2 (1:4) | 82.0 | 95.0 | 78.0 | Fails |
| Data Warehouse (2:1) | 99.3 | 99.5 | - | - |
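The throughput figures in the table above can be compared in two ways: as a percentage-point difference or as a relative gain over default Linux. The short sketch below computes both for the Default Linux and TPP columns; the dictionary layout and function names are illustrative choices, and only the numbers come from the table.

```python
# Throughput (% of baseline) for Default Linux and TPP, copied from the table.
RESULTS = {
    "Web1 (2:1)": (83.5, 99.5),
    "Cache1 (2:1)": (97.0, 99.9),
    "Cache1 (1:4)": (86.0, 99.5),
    "Cache2 (2:1)": (98.0, 99.6),
    "Cache2 (1:4)": (82.0, 95.0),
    "Data Warehouse (2:1)": (99.3, 99.5),
}


def point_difference(default: float, tpp: float) -> float:
    """Difference in percentage points of baseline throughput."""
    return tpp - default


def relative_gain(default: float, tpp: float) -> float:
    """TPP throughput gain relative to default Linux, in percent."""
    return (tpp / default - 1.0) * 100.0


if __name__ == "__main__":
    for workload, (default, tpp) in RESULTS.items():
        print(f"{workload}: +{point_difference(default, tpp):.1f} points, "
              f"+{relative_gain(default, tpp):.1f}% relative")
```

The two measures diverge most where default Linux falls furthest below baseline, so it is worth stating which one is meant when quoting an improvement.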

CXL 3.0: Entering Heterogeneous Interconnection

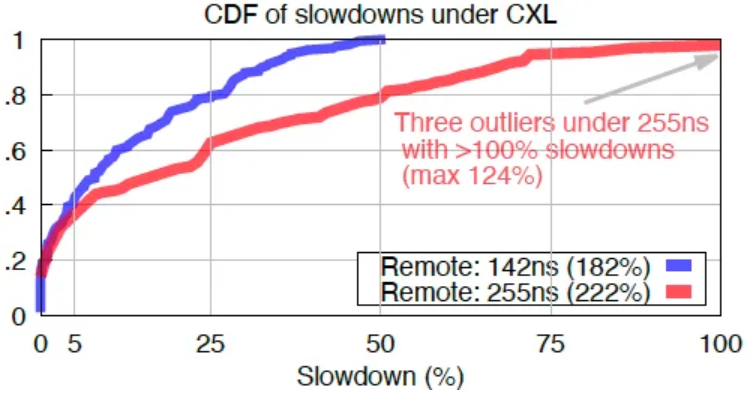

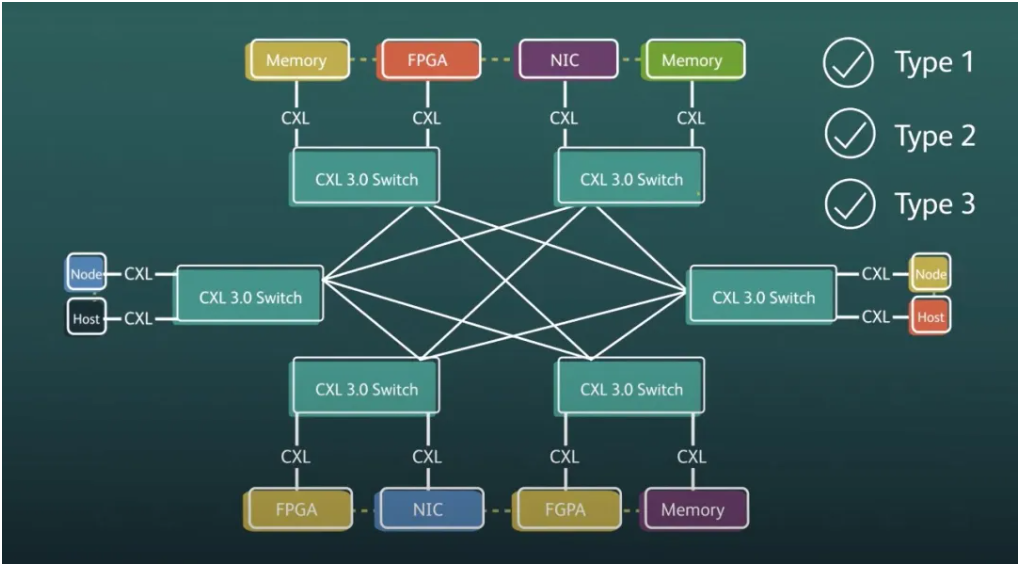

With CXL 3.0, switches support a wider range of topologies. CXL 2.0 primarily allowed fan-out to CXL.mem devices, where the host accesses memory resources on external devices. CXL 3.0 permits spine/leaf and other network topologies spanning one or more server racks. In addition, the theoretical limit on the number of CXL 3.0 devices, hosts, and switch ports has been raised to 4,096. These changes greatly increase the potential scale of CXL networks, from individual servers to entire server racks.

CXL 3.0 - Fabric Capability:

CXL 3.0: Better Scalability and Higher Resource Utilization:

● Support for Multi-Level Switching

● Enhanced Coherency

● Improved Software Functionality

Under CXL 2.0, only one processing (accelerator) device could be attached downstream of each root port. CXL 3.0 removes this constraint entirely, allowing a CXL root port to support any mix of Type-1, Type-2, and Type-3 devices. Multiple accelerators can therefore be connected to a single switch, which increases density (more accelerators per host) and makes the new peer-to-peer transfer capability more useful.

CXL 3.0 - Key Characteristics:

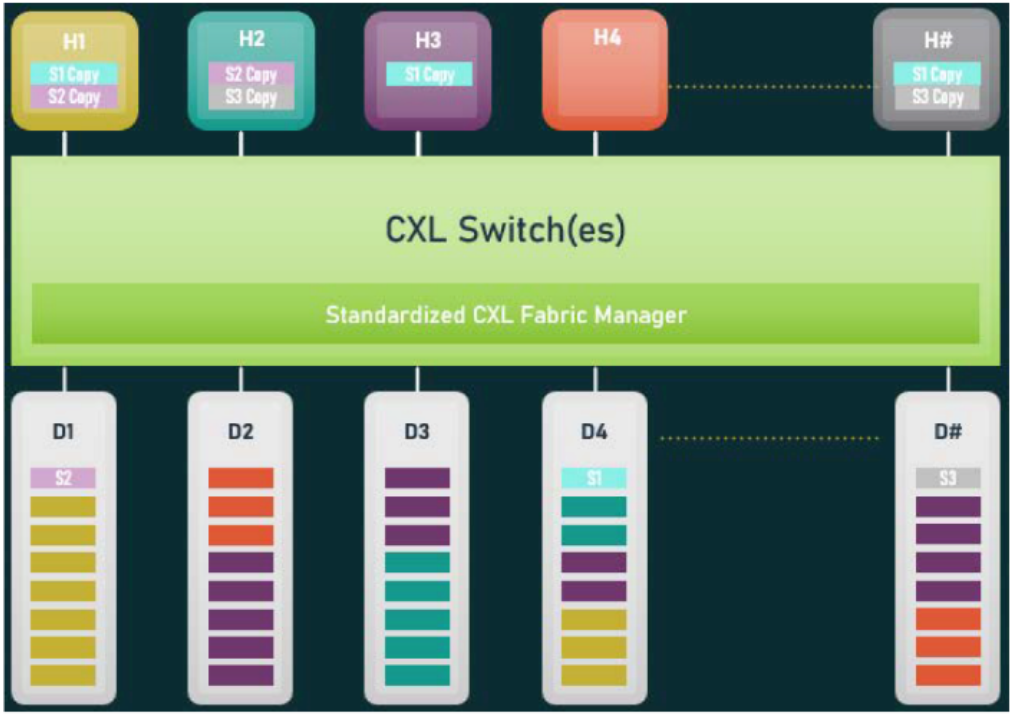

Memory sharing and direct device-to-device communication in CXL 3.0 significantly improve communication within heterogeneous networks. The host CPU and the various devices can now work on the same dataset, which avoids unnecessary data movement and duplication. For instance, as illustrated in the accompanying figure, Host 1, Host 3, and other relevant devices share a common data copy, denoted S1; Host 1 and Host 2 share another copy, S2; and Host 2 and Host # access a third shared copy, S3.
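The sharing pattern in this example can be written down as a small mapping from shared region to the hosts that access it. The sketch below is only a bookkeeping illustration: the "other relevant devices" sharing S1 are not named in the text and are left out, "Host #" is kept as the label used above, and the function names are hypothetical.

```python
# Shared regions and the hosts named in the text as accessing them.
SHARED_REGIONS = {
    "S1": ["Host 1", "Host 3"],
    "S2": ["Host 1", "Host 2"],
    "S3": ["Host 2", "Host #"],
}


def copies_without_sharing(regions: dict) -> int:
    """Each host keeps a private copy of every region it uses."""
    return sum(len(hosts) for hosts in regions.values())


def copies_with_sharing(regions: dict) -> int:
    """One copy per region lives in shared memory."""
    return len(regions)


def regions_visible_to(host: str, regions: dict) -> list:
    """Regions a given host can access, in sorted order."""
    return sorted(name for name, hosts in regions.items() if host in hosts)


if __name__ == "__main__":
    print("private copies needed:", copies_without_sharing(SHARED_REGIONS))
    print("shared copies needed:", copies_with_sharing(SHARED_REGIONS))
    print("Host 1 sees:", regions_visible_to("Host 1", SHARED_REGIONS))
```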

A notable present-day example of data sharing is artificial intelligence workloads such as the deep learning recommendation systems built by technology giants like Google and Meta. These models have billions of parameters, and the model data is often replicated across many servers; when a user request arrives, an inference operation is started. With CXL 3.0 memory sharing, large AI models can reside in several central memory devices and be accessed by multiple devices. The models can then be trained continuously on real-time user data, with updates propagated across the CXL switch network. Such an arrangement can significantly decrease DRAM costs and improve performance by an order of magnitude.

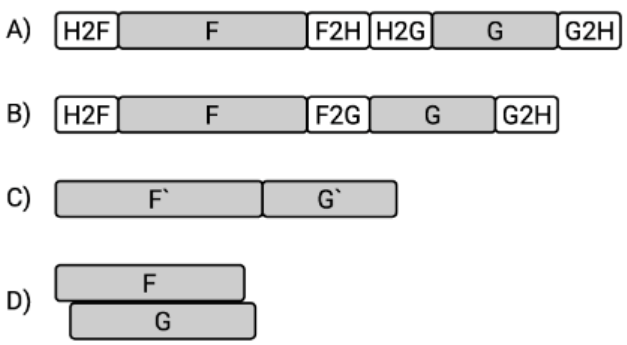

Furthermore, the enhanced coherency features of CXL 3.0 allow heterogeneous hardware accelerators to be loosely coupled and to work together. As an example, consider using CXL 3.0 to share memory between a GPU and an FPGA. Bypassing the conventional PCIe transfer path lets the two devices exchange data efficiently and complete a mathematical operation jointly. Here, an FPGA decompresses a file containing 1.6 GB of double-precision values, and the GPU then runs an integration calculation over that data. This illustrates the workload interaction expected in future heterogeneous systems, where FPGAs have significant performance advantages for sequential and pipelined computation, while GPUs excel at branching and strided memory access.

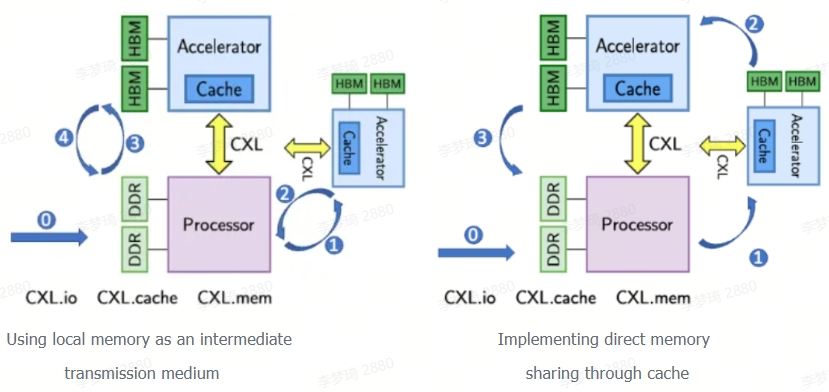

With PCIe today, data moving between the FPGA and the GPU must pass through host main memory: it is copied from the FPGA to the CPU and then from the CPU to the GPU. The specific workflow is as follows:

1. Host-to-FPGA (H2F) memory transfer

CXL supports a cache-coherent unified memory space shared by hosts and devices. In traditional PCIe-based accelerator designs, the programmer must issue explicit data movement requests to manage memory objects between host and device. Unified memory instead lets both host and device operate implicitly on the same data objects, which simplifies memory management. Both CXL and PCIe DMA transfers give the accelerator local access to data once it has been loaded onto the device. With PCIe DMA, data buffers are explicitly moved into local device memory. With CXL, accessed memory addresses are cached locally, so subsequent accesses are served efficiently. CXL's unified memory model transfers only the cache lines that the accelerator actually accesses, whereas PCIe DMA must transmit the entire address range of the transfer buffer.
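The difference in data volume is easy to illustrate. The sketch below assumes 64-byte cache lines and compares the bytes moved when only the touched lines are fetched with the bytes moved when the whole buffer is copied; the buffer size and access offsets are made-up values, and the function names are hypothetical.

```python
from typing import Iterable

CACHE_LINE_BYTES = 64  # assumed cache line size


def dma_bytes(buffer_size: int) -> int:
    """PCIe DMA model: the whole transfer buffer is copied to the device."""
    return buffer_size


def cacheline_bytes(accessed_offsets: Iterable[int],
                    line_size: int = CACHE_LINE_BYTES) -> int:
    """Coherent-access model: only distinct cache lines that are touched move."""
    touched_lines = {offset // line_size for offset in accessed_offsets}
    return len(touched_lines) * line_size


if __name__ == "__main__":
    buffer_size = 1 << 20            # 1 MiB buffer (illustrative)
    offsets = [0, 8, 64, 4096]       # byte offsets the accelerator reads
    print("DMA moves       :", dma_bytes(buffer_size), "bytes")
    print("Cache lines move:", cacheline_bytes(offsets), "bytes")
```

For sparse access patterns the cache-line model moves far less data; for a kernel that reads every byte of the buffer the two converge.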

The cache coherency and memory sharing capabilities of CXL will let the components of a heterogeneous system (CPU, FPGA, and GPU) interact directly, without first staging data on the host. Data can then be sent directly from the FPGA to the GPU (F2G), replacing the F2H and H2G transfers. Kernels will also be able to access shared memory directly from their local device. As a result, the explicit transfer steps (H2F, F2G, and G2H) can be omitted, leaving only the FPGA kernel computation (F0) and the GPU kernel execution (G0). Runtime may increase slightly because data must be loaded into the cache, but this cost can be hidden if the application prefetches data locally during pipeline execution.

Furthermore, because file decompression and integration happen one after the other, the best strategy is to run the whole program in a dataflow manner. Using the FPGA and GPU accelerators simultaneously allows communication and computation to be pipelined, which reduces overall execution time. Pipelined applications benefit from cache coherence, memory sharing, high bandwidth, and low latency, all of which are characteristics of CXL (Compute Express Link) that support heterogeneous computing models. Tightly integrating FPGA and GPU accelerators can significantly shorten application completion time compared with a traditional baseline environment.
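A rough timing model shows where the savings come from. The sketch below is an illustration under stated assumptions: the stage order H2F, F0, F2H, H2G, G0, G2H for the PCIe baseline is inferred from the stage names used above, the stage durations are invented, and the pipelined case assumes the work splits evenly into independent chunks.

```python
from typing import Dict

BASELINE_STAGES = ("H2F", "F0", "F2H", "H2G", "G0", "G2H")  # assumed order


def staged_copy_time(stage_times: Dict[str, float]) -> float:
    """PCIe baseline: every transfer and kernel runs one after another."""
    return sum(stage_times[stage] for stage in BASELINE_STAGES)


def shared_memory_time(stage_times: Dict[str, float],
                       cache_load_overhead: float = 0.0) -> float:
    """Shared-memory case: only the two kernels remain, plus cache loading."""
    return stage_times["F0"] + stage_times["G0"] + cache_load_overhead


def pipelined_time(f0: float, g0: float, chunks: int) -> float:
    """Two-stage pipeline: the GPU works on chunk i while the FPGA does i+1."""
    if chunks < 1:
        raise ValueError("chunks must be at least 1")
    f_chunk, g_chunk = f0 / chunks, g0 / chunks
    # Fill the pipeline once, then the slower stage sets the pace.
    return f_chunk + g_chunk + (chunks - 1) * max(f_chunk, g_chunk)


if __name__ == "__main__":
    times = {"H2F": 1.0, "F0": 4.0, "F2H": 1.0, "H2G": 1.0, "G0": 2.0, "G2H": 1.0}
    print("staged copies :", staged_copy_time(times))
    print("shared memory :", shared_memory_time(times))
    print("pipelined (x4):", pipelined_time(times["F0"], times["G0"], chunks=4))
```

In this model, removing the copies saves the transfer time outright, while pipelining hides the faster kernel behind the slower one, so the total approaches the duration of the slower stage as the number of chunks grows.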

As the discussion above shows, the significance of CXL extends beyond memory: it spans many forms of computing resources, including memory, networking, storage, and accelerator cards. In a composable server architecture, servers are decomposed into discrete components that are grouped into pools and dynamically allocated according to workload requirements. Data centre racks can then act as the unit of computing, and customers can choose any combination of cores, memory, and AI processing capability. This vision is a transformative approach to server design and cloud computing. Implementing it, however, poses many complex engineering challenges, of which the interconnect protocol is the most pressing. That urgency has contributed to the rapid emergence of the CXL 2.0 and 3.0 standards.

Currently, the main application scenarios for CXL remain memory expansion and resource pooling. Given current server market dynamics, low-core-count CPUs will continue to use conventional DDR channels with DIMMs, while high-core-count CPUs will adopt CXL flexibly depending on system cost, capacity, power consumption, and bandwidth requirements. Memory is where CXL's core advantage lies, and memory sharing offers considerable room to improve both performance and cost-effectiveness. Initial memory pool implementations can reduce overall DRAM demand by approximately 10%. A lower-latency memory pool could save around 23% in multi-tenant cloud environments, and memory sharing could reduce DRAM demand further, by over 35%. Savings on this scale amount to billions of dollars of data centre DRAM spending each year.

The rise of scale-out cloud data centres has significantly changed the market. The emphasis has shifted from ever-faster CPU cores to delivering and integrating overall computing performance economically and efficiently. Specialization of computing resources has become the prevailing trend, and heterogeneous computing now dominates the data centre. Accelerators such as dedicated ASICs, FPGAs, GPUs, DPUs, and IPUs can deliver more than tenfold performance gains on specific tasks while using fewer transistors.

The current approach of designing chips to handle an entire workload is giving way to a more cost-effective strategy: Domain-Specific Architecture (DSA) chips configured for the demands of particular workloads. By combining software with heterogeneous computing systems (CPUs plus integrated or discrete GPUs, FPGAs, or DSA processors), organizations can build large systems tailored to applications such as networking, storage, virtualization, security, databases, video imaging, and deep learning.

The unit of computing has evolved from individual chips or servers to the entire data centre. A unified device interconnect, termed the Unified Interconnected Fabric, enables multiple processors, accelerators, and resource pools to interoperate in heterogeneous environments.

As a leader in the development of intelligent computing centres, Ruijie Network is committed to delivering innovative product solutions and services to its clients, thereby addressing the demands of the emerging intelligent computing era in partnership with its customers.
